Reducing our AWS bill by $100,000

technical · Jack Ellis · Jan 22, 2024

By popular demand, I’m back with another technical blog post. Who am I kidding? I love writing these technical blog posts, and in this post, I will go through everything we did to cut $100,000/year off of our AWS bill.

Setting the stage

One of our goals for 2024 was to optimize our infrastructure spending because it had been rising fast as our business grew. We’re an independent company with zero outside funding, and we stay in business by spending responsibly. In addition, we have a handful of other areas that we want to invest in to deliver even more value to our customers, and we were overspending on AWS.

We decided to focus only on our ingest endpoint, which handles billions of requests a month, because that’s where all the money was being spent. We use Laravel Vapor, which deploys Laravel applications to AWS, and we were utilizing the following services:

- Application Load Balancer (ALB)

- Web Application Firewall (WAF)

- AWS Lambda

- Simple Queue Service (SQS)

- CloudWatch

- NAT Gateway

- Redis Enterprise Cloud

- Route53

We use other services to run our application, but these were the areas of attack for reducing costs on AWS.

The flow of the ingest endpoint was a CDN (not CloudFront) -> WAF -> ALB -> Lambda -> SQS -> Lambda -> [PHP script which utilizes Redis and SingleStore] -> Done -> Add to CloudWatch logs.

With that out of the way, let’s get into the specifics of how we reduced cost.

CloudWatch

Savings: $7,550 per year

We’re starting here because this was the first area I addressed. I am moderately furious at this cost because it was pointless and purposeless spending.

For a long time, I was convinced that our high CloudWatch costs were caused by Laravel Vapor injecting lines like “Preparing to add secrets to runtime” as log items. But that was removed, and the pointless CloudWatch costs kept occurring.

I looked deeper, and it turns out that we were spending $7,000/year for these pointless logs.

And, if you’re thinking, “These aren’t pointless; we actually use these for debugging,” then great, this isn’t a wasted expense for you. We don’t use these; we use Sentry to profile performance and catch errors.

After seeing that, I went down the rabbit hole and found tutorials on what I could do. I cannot find the specific articles I used, but I’ll write the steps you can take to stop Lambda from writing these pointless logs. I will tailor this to Laravel Vapor users, but it will work the same for you if you’re using Lambda.

1. Go into IAM

2. Click on Roles

3. Search for laravel-vapor-role (This is the role we use for running Lambda functions. If you’re not sure what role you use, open up your Lambda function, go to Configuration, then Permissions, and you’ll see the execution role)

4. Click on the role

5. Click into the laravel-vapor-role-policy policy to edit it

6. Remove the logs:PutLogEvents line from the policy and save the changes

7. Go into Lambda

8. Click the name of your function (note: you’ll need to complete this for every function)

9. Click the Configuration tab

10. Click the Monitoring and operations tools link on the left

11. Under the Logging configuration section, click the edit button

12. Expand the Permissions section

13. Untick Add required permissions

14. Save it

Note: As of 20th January 2024, there is a bug in the Lambda UI which ticks the "Add required permissions" option despite you having it off. Until this is fixed, I advise that once you're on the Edit logging configuration page (after step 11), refresh your browser. That'll give you the accurate state.

And that’s all you need to do. But the best part is that you keep your Lambda monitoring. You know that Monitor tab within your function that has all those useful graphs? You still get that as part of AWS Lambda. Fantastic news.
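If you would rather script the policy edit than click through the console, here is a minimal sketch of the IAM half of the steps above. It only transforms a policy document held in memory; fetching the policy and pushing it back to IAM are left out. The `remove_actions` helper and the example policy are illustrative assumptions, not what Vapor actually generates.

```python
import copy
import json


def remove_actions(policy: dict, actions_to_remove: set[str]) -> dict:
    """Return a copy of an IAM policy document with the given actions
    stripped from its Allow statements."""
    blocked = {action.lower() for action in actions_to_remove}  # IAM actions are case-insensitive
    cleaned = copy.deepcopy(policy)
    statements = cleaned.get("Statement", [])
    if isinstance(statements, dict):  # a single statement object is valid too
        statements = [statements]

    kept = []
    for statement in statements:
        # Leave Deny statements and NotAction statements untouched.
        if statement.get("Effect") != "Allow" or "Action" not in statement:
            kept.append(statement)
            continue
        actions = statement["Action"]
        if isinstance(actions, str):  # "Action" may be a string or a list
            actions = [actions]
        remaining = [a for a in actions if a.lower() not in blocked]
        if remaining:
            statement["Action"] = remaining
            kept.append(statement)
        # A statement with no actions left is dropped entirely.

    cleaned["Statement"] = kept
    return cleaned


# Hypothetical policy, for illustration only.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
                "sqs:SendMessage",
            ],
            "Resource": "*",
        }
    ],
}

cleaned_policy = remove_actions(example_policy, {"logs:PutLogEvents"})
print(json.dumps(cleaned_policy, indent=2))
```

Note that this only matches exact action names. A wildcard such as `logs:*` would still grant the permission, so check the policy for those by eye.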

In addition to the above, if you already have a ton of CloudWatch logs or plan to use them in some capacity, go into CloudWatch and set a retention policy on your log group. By default, there isn’t one.
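Setting retention by hand gets tedious with many log groups, so here is a rough sketch of how it could be automated. It assumes a CloudWatch Logs client shaped like boto3's (`describe_log_groups` and `put_retention_policy`); the stand-in client and log group names below are made up so the example runs without AWS credentials.

```python
def apply_retention(logs_client, retention_days: int = 30) -> list[str]:
    """Set a retention policy on every log group that does not have one.

    Returns the names of the log groups that were updated.
    """
    updated = []
    kwargs = {}
    while True:
        page = logs_client.describe_log_groups(**kwargs)
        for group in page.get("logGroups", []):
            # Groups that never expire have no "retentionInDays" key.
            if "retentionInDays" not in group:
                logs_client.put_retention_policy(
                    logGroupName=group["logGroupName"],
                    retentionInDays=retention_days,
                )
                updated.append(group["logGroupName"])
        token = page.get("nextToken")
        if not token:
            return updated
        kwargs = {"nextToken": token}


class FakeLogsClient:
    """Stand-in for a real client, used only to make this sketch runnable."""

    def __init__(self, groups):
        self.groups = groups

    def describe_log_groups(self, **kwargs):
        return {"logGroups": self.groups}

    def put_retention_policy(self, logGroupName, retentionInDays):
        for group in self.groups:
            if group["logGroupName"] == logGroupName:
                group["retentionInDays"] = retentionInDays


fake_client = FakeLogsClient(
    [
        {"logGroupName": "/aws/lambda/example-http"},  # hypothetical, no retention set
        {"logGroupName": "/aws/lambda/example-queue", "retentionInDays": 7},
    ]
)
print(apply_retention(fake_client, retention_days=14))
```

With real credentials you would pass `boto3.client("logs")` instead of the fake. CloudWatch only accepts specific retention values (14 and 30 are among them), so pick one from the documented list.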

NAT Gateway

Savings: $17,162 per year

Up next is this fun little toy. A toy that has stung plenty of people in the past. I didn’t think much of it when I added a NAT gateway to our service. Sure, I knew there’d be extra costs as we moved towards going private, but I was comfortable with some of our workload going over that NAT gateway. After seeing vast amounts of data being moved across it, I admitted I was wrong.

We now use a NAT gateway to communicate with the internet from within a private subnet in AWS. The only things that go over this gateway are things like API calls to external services. All database interactions are now done via VPC Peering (Redis) or AWS PrivateLink (SingleStore).

We route through private networking to increase speed and improve security. It’s an absolute bargain as far as I’m concerned.

S3

Savings: $5,774 per year

This one was quick. We had versioning turned on in one of our buckets. And it was a small UI area which I had missed entirely. So, if your S3 cost is through the roof and it seems a bit unreasonable, check your buckets. If a “Show versions” radio input appears above the table, that’s the cause. We deleted our historical data there and were laughing.
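To get a feel for how much of a bucket is old versions, something like the sketch below could help. It assumes entries shaped like the `Versions` list in an S3 ListObjectVersions response (`Key`, `Size`, `IsLatest`); the sample data is invented, and fetching the real listing is not shown.

```python
def version_usage(versions: list[dict]) -> dict[str, int]:
    """Split total bytes into current and noncurrent object versions."""
    usage = {"current": 0, "noncurrent": 0}
    for version in versions:
        bucket = "current" if version["IsLatest"] else "noncurrent"
        usage[bucket] += version["Size"]
    return usage


# Hypothetical listing: one live object and two superseded copies of it.
sample_versions = [
    {"Key": "exports/report.csv", "Size": 1_000, "IsLatest": True},
    {"Key": "exports/report.csv", "Size": 900, "IsLatest": False},
    {"Key": "exports/report.csv", "Size": 800, "IsLatest": False},
]

usage = version_usage(sample_versions)
print(usage)
```

If the noncurrent figure dwarfs the current one, versioning is probably where the storage bill is coming from.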

I hope this little S3 note helps somebody out there. But let’s move on.

Route53

Savings: $2,547 per year

Another quick and easy one. And this is still a WIP. No, we didn’t secure colossal cost savings, but we still don’t want to waste money.

Route53 bills you for queries. DNS resolvers cache records based on the TTL value of the DNS entry. If you set the TTL too low on a record, resolvers will (well, SHOULD, on modern DNS resolvers) expire their cached copy sooner and request it from Route53 again. This costs you money each time.

The solution is to increase the TTL on records you know won’t need changing for a while or in an emergency. AWS also doesn’t charge for queries to alias records that point at AWS resources.
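To see how much the TTL matters, here is a back-of-the-envelope sketch. It assumes an idealized model where every resolver re-queries exactly once per TTL, and the price per million queries and the resolver count are placeholder assumptions, so check the current Route53 pricing before relying on the numbers.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60


def monthly_query_cost(
    resolvers: int,
    ttl_seconds: int,
    price_per_million: float = 0.40,  # assumed price, not taken from the article
) -> float:
    """Estimate the monthly query cost for one record.

    Idealized: each resolver asks again as soon as its cached copy expires.
    """
    queries = resolvers * (SECONDS_PER_MONTH / ttl_seconds)
    return queries / 1_000_000 * price_per_million


# 1,000 resolvers is a made-up figure for illustration.
short_ttl_cost = monthly_query_cost(resolvers=1_000, ttl_seconds=60)
long_ttl_cost = monthly_query_cost(resolvers=1_000, ttl_seconds=3_600)
print(f"60s TTL: ${short_ttl_cost:.2f}/month, 1h TTL: ${long_ttl_cost:.2f}/month")
```

Under this model, going from a 60-second TTL to a one-hour TTL cuts the query count for that record by a factor of 60.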

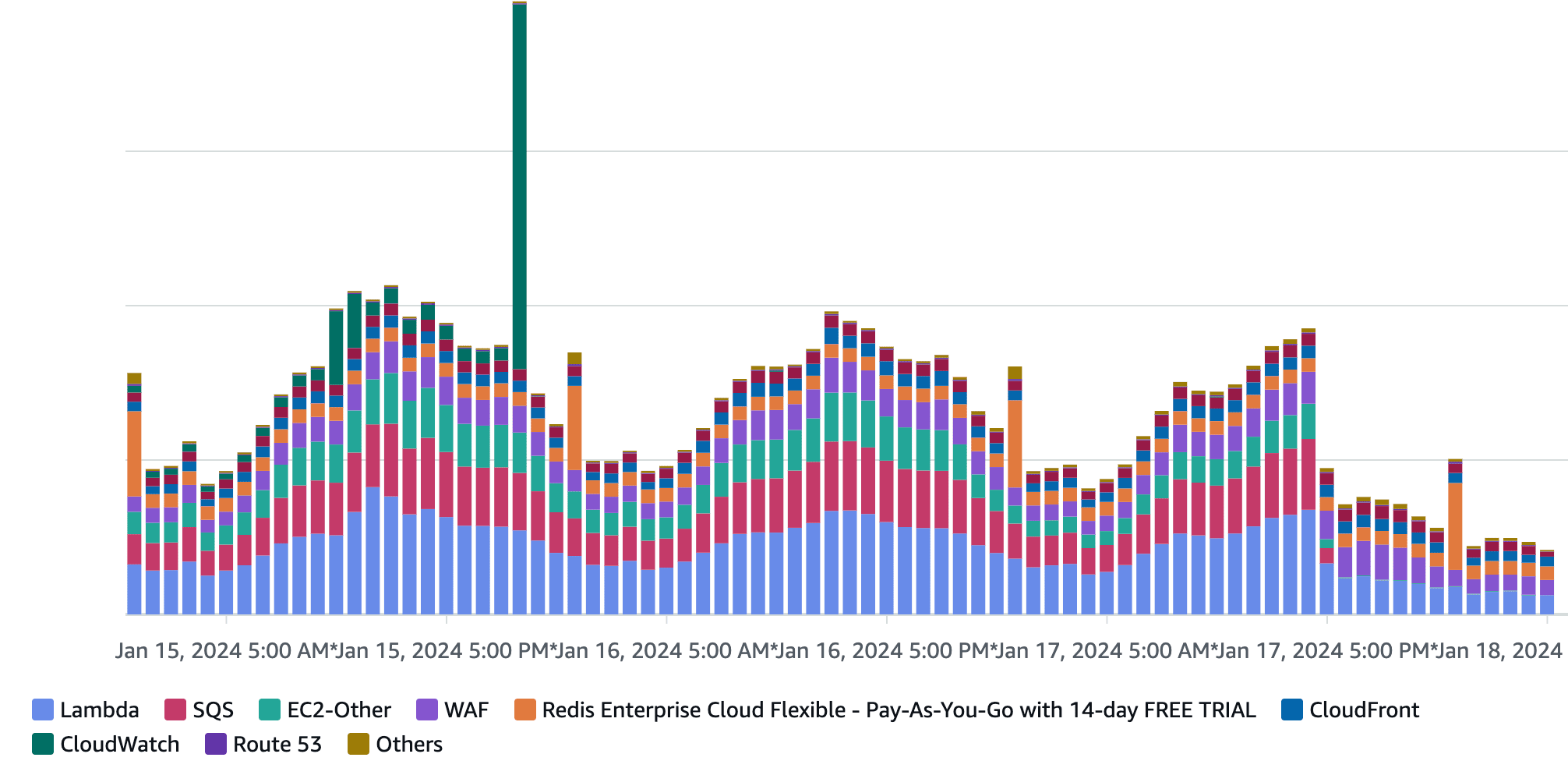

Lambda & SQS

Lambda savings: $20,862 per year SQS savings: $23,989 per year

Now, let’s get into where we’ve really cut costs. Up until recently, we were doing Lambda -> SQS -> Lambda, and this felt pretty good. After all, we wanted resilience, and, when making this decision initially, our database was in a single AZ, so we had to use SQS in-between because it was a multi-AZ, infinitely scalable service.

But now we’ve built our infrastructure where we have our databases in multiple availability zones, so we just don’t need SQS, and it’s instantly dropped our Lambda cost.

This is happening because we’ve cut the Lambda requests in half and introduced the following changes:

- There is now only one Lambda request per pageview instead of two

- The average Lambda duration on the HTTP endpoint has decreased significantly since we’re no longer putting a job into SQS; we’re simply hitting Redis and running a database insert (each of these operations typically takes 1ms or less)

- We are still using SQS as a fallback (e.g. if our database is offline), but we’re not using it for every request.

- We are no longer running additional requests to SQS for each pageview/event

This obviously wouldn’t work for everyone since most people use their queue for heavy lifting, but we don’t do heavy lifting in our ingest. In fact, our pageview/event processing is absolutely rapid, and our databases are built to handle inserts at scale.
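The "write directly, fall back to the queue" idea can be sketched in a few lines. This is not our actual ingest code (that is PHP); `ingest`, `write`, and `enqueue` are hypothetical names, and the in-memory lists stand in for the database and SQS.

```python
from typing import Callable


def ingest(
    event: dict,
    write: Callable[[dict], None],
    enqueue: Callable[[dict], None],
) -> str:
    """Try the direct database write first; queue the event only on failure."""
    try:
        write(event)
        return "stored"
    except Exception:
        # Real code should catch only the database client's connection and
        # timeout errors rather than every exception.
        enqueue(event)
        return "queued"


stored: list[dict] = []   # stands in for the database
queued: list[dict] = []   # stands in for the fallback queue


def failing_write(event: dict) -> None:
    raise ConnectionError("database offline")


print(ingest({"path": "/"}, stored.append, queued.append))
print(ingest({"path": "/pricing"}, failing_write, queued.append))
```

The happy path touches the queue zero times, which is where the request savings come from; the queue only sees traffic when the write fails.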

WAF

Savings: $12,201 per year

At the time of writing, this is a work in progress. We are planning to move away from AWS WAF for security on our ingest endpoint and use our CDN provider’s per-second rate limiting instead. The cost is included in what we already pay, and we prefer a per-second rate limit to a five-minute one. We aren’t using WAF for bot blocking (e.g. scrapers); we actually do that in Lambda (customers will find out why soon; watch this space).
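To illustrate why the window size matters, here is a toy fixed-window limiter. It is only a sketch of the general idea, not how AWS WAF or any CDN implements rate limiting, and the limits and client IP are made-up values.

```python
class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        # Counts are never evicted here; a real limiter would expire old windows.
        self.counts: dict[tuple[str, int], int] = {}

    def allow(self, client: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        key = (client, window)
        self.counts[key] = self.counts.get(key, 0) + 1
        return self.counts[key] <= self.limit


# Same average rate (10 requests/second), two different window sizes.
per_second = FixedWindowLimiter(limit=10, window_seconds=1)
five_minute = FixedWindowLimiter(limit=3000, window_seconds=300)

# A burst of 50 requests from one client, all within a single second.
burst = [i / 50 for i in range(50)]
allowed_per_second = sum(per_second.allow("203.0.113.7", t) for t in burst)
allowed_five_minute = sum(five_minute.allow("203.0.113.7", t) for t in burst)
print(allowed_per_second, allowed_five_minute)  # prints: 10 50
```

The per-second window clips the burst immediately, while the five-minute window lets all of it through because the client is still far below its budget for the window.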

CDN providers such as Bunny.net and cdn77.com offer pricing that is very competitive with CloudFront, along with solid reliability. Whilst I don’t recommend using Bunny for custom domains at scale, they have been a reliable vendor for us and many people in my network.

For our use case and scale, CloudFront just isn’t economical. We only had to raise our entry-level pricing by $1 because we knew we could get our costs down and not have to charge our customers lots more. Comparatively, we’ve seen others in the analytics space hike their prices up tremendously. But I digress.

CloudFront

Savings: $4,800 per year

For CloudFront, we had a good lesson on how the Popular Objects section works. You can go into CloudFront -> Popular objects, choose a distribution and establish where you may be bleeding.

We’ve been spending $4,800 per year on EU (Ireland), and I just ignored it. Perhaps we were somehow getting more EU traffic than ever before. But it just didn’t make sense, considering the rest of the world was only costing $488 per year.

Well, long story short, we did some digging, and our EU isolation infrastructure currently sends our main servers over 100 million requests a month. We have been moving around 4 TB of data per month. This is to achieve the sync of blocked IPs that customers add to their sites, so it’s somewhat expected, but we can easily remove this cost.

My advice is to really think about whether CloudFront is worth the cost if you’re running it at scale. As I said above, there are other options available, and they are fantastic. But if you’re using CloudFront and you want to optimize spend, use that Popular Objects section to see if there’s any way you could offload certain assets to a cheaper CDN.

Note: I only included the cost of CloudFront for this excess cost that had slipped by us, not for serving our dashboard/API.

Everything else

Our savings total up to $94,885. But now let’s do some magic with that figure:

- $97,731.55 after we add 3% for Support (developer) package from AWS

- $109,459.34 after we add 12% for GST & PST (I appreciate sales tax is recoverable but wanted to include it for full context)

So, the work here has saved us $109,459 USD per year (minus returned sales tax). All of this was needed because we are going to be introducing new features that would drive these costs up.
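If you want to check the arithmetic, the per-service figures from the sections above add up as follows. This is just a quick sketch using `Decimal` to avoid floating-point rounding; the variable names are mine.

```python
from decimal import Decimal, ROUND_HALF_UP

# Yearly savings in USD, taken from each section of this post.
savings = {
    "CloudWatch": 7_550,
    "NAT Gateway": 17_162,
    "S3": 5_774,
    "Route53": 2_547,
    "Lambda": 20_862,
    "SQS": 23_989,
    "WAF": 12_201,
    "CloudFront": 4_800,
}

CENT = Decimal("0.01")

subtotal = sum(savings.values())
# Add 3% for the AWS Support (developer) package.
with_support = (Decimal(subtotal) * Decimal("1.03")).quantize(CENT, rounding=ROUND_HALF_UP)
# Add 12% for GST & PST.
with_tax = (with_support * Decimal("1.12")).quantize(CENT, rounding=ROUND_HALF_UP)

print(subtotal, with_support, with_tax)
```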

As I said earlier, we’re an independent company and every dollar matters. Our goal is not to IPO or become the next big unicorn; our goal is to provide the best possible alternative to Google Analytics for our customers and survive for the long term. And, in all honesty, we’d rather continue to invest in our analytics database software, which does a ton of heavy lifting, than pointlessly give the money to AWS for things we don’t need.

I hope this article is helpful. Please let me know if this blog post helped you save money on your bill.

P.S. The discussion on Twitter/X is here

BIO

Jack Ellis, CTO
