Someone attacked our company

Jack Ellis · Dec 14, 2020

At the start of November, someone decided that they would try to destroy our company.

They subjected us to multiple, malicious, targeted DDoS (Distributed Denial-of-Service) attacks over two weeks. They intended to damage the integrity of our customers’ data and take our service offline. This attack wasn’t random and it wasn’t just your typical spam. This attack was targeted at Fathom and was intended to put us out of business.

But why would someone do this?

Smooth sailing

The last few years have been full of hard work. We’d had our fair share of challenges, dealing with an abundance of compliance law, scaling huge amounts of analytics data, and so much more, but we’d never been attacked maliciously.

Since we incorporated Fathom Analytics as a business, we’ve been in a fortunate position where we could spend the majority of our time improving our software and supporting our customers. Outside of that, we’d work on marketing, legal, accounting, and all the other pieces needed to grow a business.

In the early days, Fathom was running on a few thousand small websites. But Fathom is not a hobby project, and both of us have been full time on it for a while now. We’re no longer the new kid on the block. We’ve proven ourselves time and time again, and we’ve become the leading Google Analytics alternative. These days, Fathom is used by some of the largest companies in the world, and we’ve become established in the analytics space.

Fathom has been very successful. And whilst we’ve always worked hard, the journey has been a relatively smooth one. We spend our days doing what we love: building software for the best customers in the world. When we say “best customers in the world”, we don’t say this as a gimmick. Before writing this article, we emailed all of our customers about the DDoS attack we’ve been subjected to and every single reply was full of support and love. We are so unbelievably lucky to have such incredible customers.

Too good to be true

We were really on a roll. We’d just landed our first big guest for our podcast, DuckDuckGo, and we had made some huge advancements on Version 3. Behind the scenes, great things were happening. We were building innovative new solutions and getting so many new customers coming through the door every single day. Everything felt very in-sync.

But then some customers started tweeting & emailing us saying their dashboards had been hit with gigantic spam attacks.

It turned out that a handful of our customers had been hit with spam attacks, making their dashboards impossible to read. At the time, we didn’t think too much of it. Referral spam is a problem all analytics companies face, and it has happened a few times before over the last few years. We cleared the data out, our customers were happy again and we were free to get on with our lives. Or so we thought.

5th November

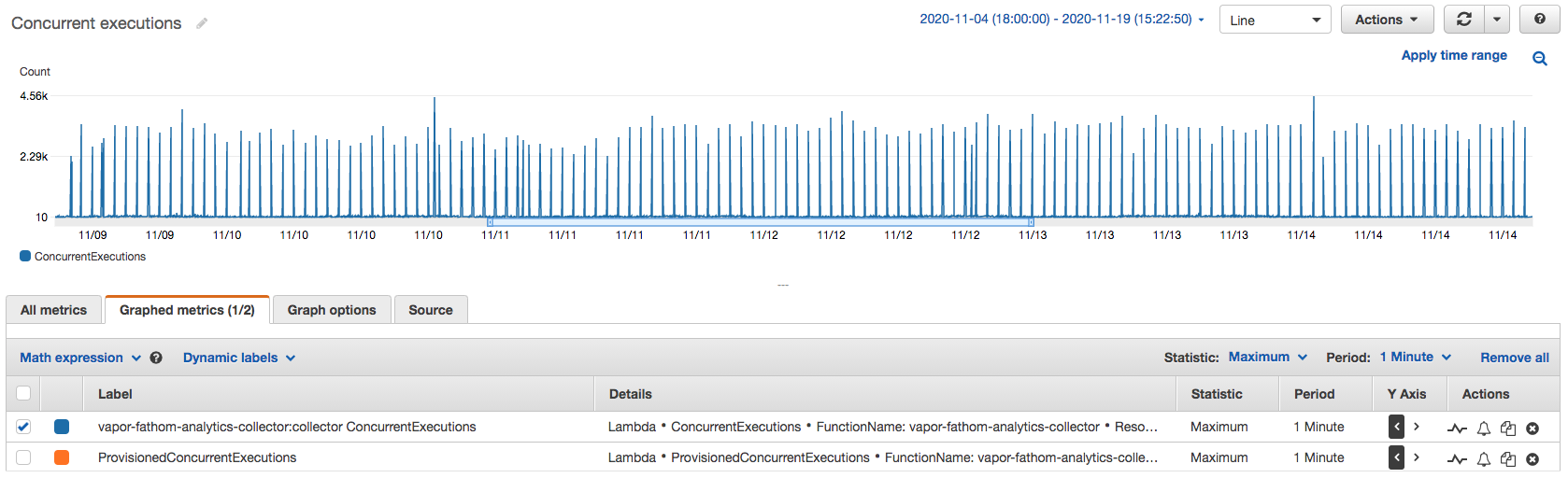

On the 5th of November, we started getting hit with a few thousand concurrent connections. It ranged from 3,000 to 10,000 concurrent connections at any one time. To put this into real terms, 3,000 concurrent connections would be the monthly equivalent of around 79 billion page views.
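The 79 billion figure can be sanity-checked with some quick arithmetic. The sketch below assumes each request takes about 100ms to process (the same figure used for the Justin Bieber comparison later in this post) and a 30.4-day month. Both are assumptions for illustration, not measured values.

```python
# Back-of-the-envelope: concurrent connections -> monthly page views.
# Assumptions (not measured): 100 ms per request, 30.4 days per month.

SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_MONTH = 30.4


def monthly_page_views(concurrent: int, seconds_per_request: float = 0.1) -> float:
    """Estimate the monthly page views sustained at a given concurrency."""
    requests_per_second = concurrent / seconds_per_request
    return requests_per_second * SECONDS_PER_DAY * DAYS_PER_MONTH


if __name__ == "__main__":
    for concurrent in (3_000, 10_000):
        billions = monthly_page_views(concurrent) / 1e9
        print(f"{concurrent:>6} concurrent ~ {billions:.0f} billion page views/month")
```

Under those assumptions, 3,000 concurrent connections works out to roughly 79 billion page views a month, and 10,000 to well over 250 billion.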

We thought this was strange, and we knew it was a spam attack, but our system was able to take it. The main downsides of the spam attack were that it created a significant backlog and put spam on targeted customers’ dashboards. At present, we put all incoming page views into SQS (an infinitely scalable queue system), and we process them with a limited amount of “queue workers” to ensure we don’t overload our database. Well, because we were receiving many thousands of additional concurrent requests, our workers couldn’t keep up, and a backlog started. So I had to write some code to express-process the backlog.
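A toy model shows why a queue with a deliberately capped worker pool builds a backlog during a flood, and why that backlog lingers after the flood ends. This is only an illustration: the function name and all of the numbers are made up, and it does not touch SQS at all.

```python
def backlog_over_time(arrivals_per_minute, worker_capacity_per_minute):
    """Return the queue depth at the end of each minute.

    arrivals_per_minute: messages arriving in each minute.
    worker_capacity_per_minute: the most the fixed pool of workers can
    process per minute (kept low on purpose to protect the database).
    """
    backlog = 0
    depths = []
    for arrived in arrivals_per_minute:
        backlog += arrived
        backlog -= min(backlog, worker_capacity_per_minute)
        depths.append(backlog)
    return depths


if __name__ == "__main__":
    # Hypothetical traffic: normal, then a three-minute flood, then normal again.
    arrivals = [1000, 1000, 5000, 5000, 5000, 1000, 1000]
    print(backlog_over_time(arrivals, worker_capacity_per_minute=1500))
```

In this made-up run the queue is empty before the flood, climbs to 10,500 messages during it, and then drains by only 500 messages a minute afterwards, because the workers have very little spare capacity once normal traffic resumes.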

This attack happened on the same day that I watched a video link of my nan’s funeral. All I wanted to do was spend time with my daughter but the attack meant that I had to be on my computer from morning to night. This day was so stressful and I was emotionally wiped.

Fortunately, the attack stopped and I was finally able to go to bed.

6th November

I woke up the next day and things were looking good. We still had a backlog that we were playing catch-up with but only 1% of our customers had data delays.

However, around 13 hours after the previous attack had ended, round two began. This attack was smaller than the last one. It went on for 2 hours, then took a 45-minute hiatus (the computers must have needed a tea break), and then shifted into a pattern where they sent around 2 attacks per hour for 4 hours.

Once that was done, we thought things must be over. They’d attacked us on & off for multiple days, they’d done some damage and surely it was time to move on to the next target.

The next 24 hours were totally (and blissfully) silent.

8th November

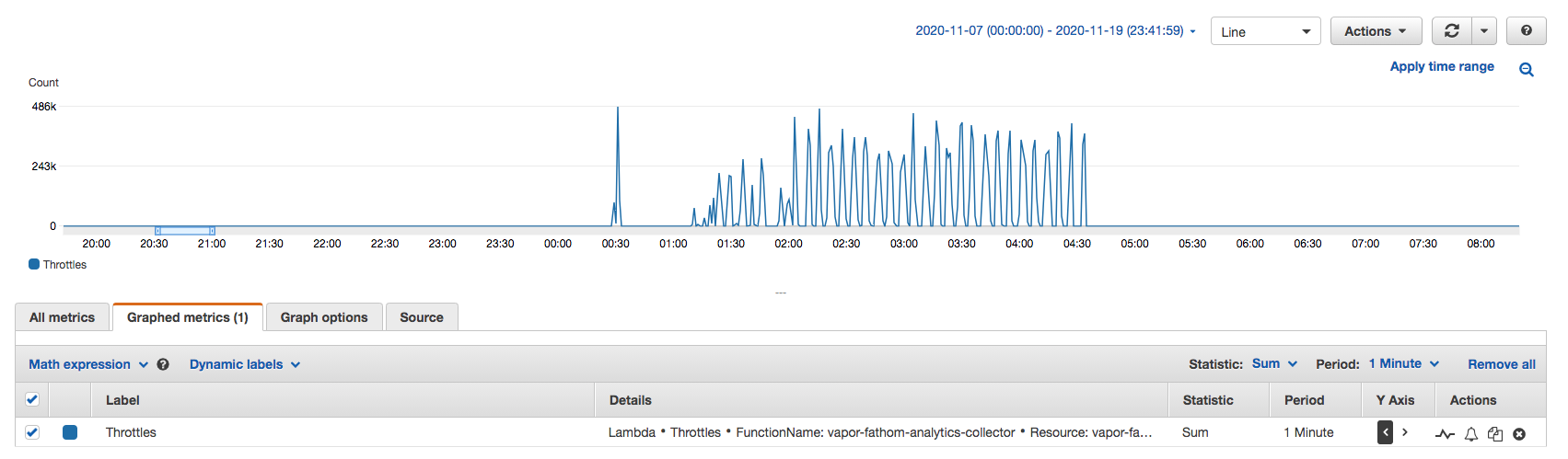

On the 8th of November, the attacks returned. They were the same as before, but they sent far more traffic, and we were throttling on/off as we were hit with up to 500,000 throttles (traffic rejections) for nearly 4 hours, along with 8,000 concurrent executions being processed.

At this point, we were still playing catch up with our backlog, so this wasn’t a welcome contribution.

There wasn’t much we could do but sit back and wait. Then the attack stopped. 21 hours of silence. Was it over?

Calm before the storm

On the 9th of November, things started to get weird. The attacker started sending us 3,200 concurrent spam requests at the start of every hour, 24x7.

Fortunately for us, the attack was incredibly predictable, and we had already deployed version one of our spam protection system. The attacker flooded us with spam at certain minutes of every hour. So we responded by tweaking our algorithm to be more sensitive during anticipated attack times. And outside of a few outliers, it worked incredibly well. We finally had our first win.
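The idea of being “more sensitive during anticipated attack times” can be sketched as a spam threshold that drops during a known window of each hour. Everything here is hypothetical: the actual attack minutes, the thresholds, and the notion of a 0 to 1 spam score are assumptions for illustration, not details of our real algorithm.

```python
from datetime import datetime, timezone

# Hypothetical values: the real attack minutes and thresholds are not published.
ATTACK_MINUTES = range(0, 5)   # assume the flood lands in the first 5 minutes of each hour
NORMAL_THRESHOLD = 0.8         # score needed to flag a page view as spam normally
STRICT_THRESHOLD = 0.5         # lower bar (more sensitive) inside the attack window


def spam_threshold(now: datetime) -> float:
    """Pick the threshold that applies at this moment."""
    return STRICT_THRESHOLD if now.minute in ATTACK_MINUTES else NORMAL_THRESHOLD


def is_spam(score: float, now: datetime) -> bool:
    """Flag a page view when its score reaches the current threshold."""
    return score >= spam_threshold(now)


if __name__ == "__main__":
    borderline = 0.6
    during = datetime(2020, 11, 9, 14, 2, tzinfo=timezone.utc)
    outside = datetime(2020, 11, 9, 14, 30, tzinfo=timezone.utc)
    print(is_spam(borderline, during), is_spam(borderline, outside))
```

The trade-off is the usual one: a lower threshold catches more spam inside the window but risks more false positives there, which is why it only applies when an attack is expected.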

We had made an important mental switch from “this sucks” to “we can fight this”. We had so many ideas for how we could defend against spam that we decided we weren’t going to pull the trigger on any kind of DDoS protection service. We could just handle it ourselves. But we were wrong.

Breaking point

It was the 15th of November and the intermittent attacks were still running every hour, 24x7. We had been through an exhausting week but we felt comfortable with our mitigation technique. We could live with this.

We had decided that we were going to simply increase our Lambda concurrency limit to 8,000,000 requests per second (800,000 concurrents) and handle the spam attacks using DynamoDB for various blocking techniques. Send us traffic that our algorithm considers to be spam? You’re banned. And whilst we knew it seemed like an extreme solution, we were going to give it a try.
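A “you’re banned” rule boils down to a key-value lookup with an expiry. The sketch below uses an in-memory dictionary as a stand-in for a table with expiring entries. The class, the one-hour ban length, and the choice of key are all hypothetical, and nothing here calls DynamoDB.

```python
import time


class BanList:
    """In-memory stand-in for a key-value table with expiring entries."""

    def __init__(self, ban_seconds: float = 3600.0, clock=time.time):
        self._ban_seconds = ban_seconds
        self._clock = clock
        self._expires_at = {}  # key (whatever identifies the sender) -> expiry time

    def ban(self, key: str) -> None:
        self._expires_at[key] = self._clock() + self._ban_seconds

    def is_banned(self, key: str) -> bool:
        expiry = self._expires_at.get(key)
        if expiry is None:
            return False
        if self._clock() >= expiry:
            del self._expires_at[key]  # the ban has lapsed, so forget it
            return False
        return True


def handle_request(key: str, looks_like_spam: bool, bans: BanList) -> int:
    """Return an HTTP-style status: 403 for banned traffic, 200 otherwise."""
    if bans.is_banned(key):
        return 403
    if looks_like_spam:
        bans.ban(key)
        return 403
    return 200
```

Letting bans expire matters because a sender that trips the detector once is not necessarily malicious forever, and an expiring ban limits the damage of a false positive.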

I decided to take a break from it all. I was wiped. Not only had we been victims of DDoS spam attacks all week, but I’d also had to learn so much about an area I’d never needed to care about. There was so much information to take in.

I had dinner with my wife and we sat down to watch a movie together. After being an absent husband and father for the last 10 days, I was excited to spend some time with my wife. And then my phone went off. We were under attack again. I told my wife that I’d be right back.

I jumped onto my computer and I could see that the attacker had ramped things up. Unsatisfied with the damage they’d already done, they were attacking us on a Saturday night, taking our service offline.

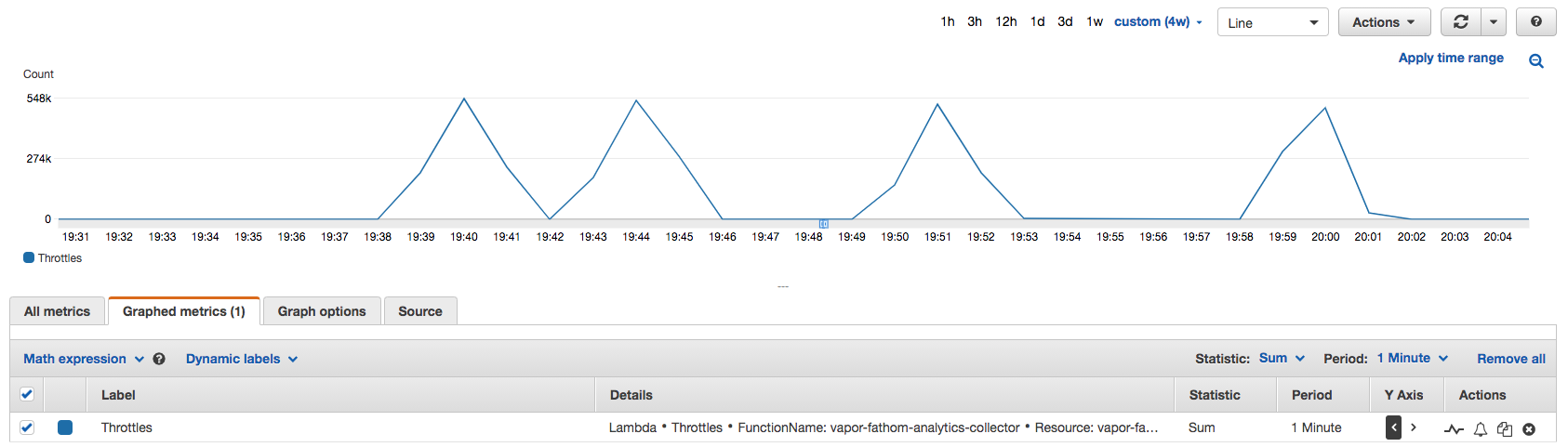

We were now receiving hundreds of thousands of concurrent requests again. All we could see in our metrics were pyramids. These pyramids show multiple waves of 500,000 throttles per minute. For the non-nerds who still aren’t too sure what a “throttle” is: we run our application without servers that we manage (serverless). Because we do this, we get to choose a sensible “maximum concurrency” setting to avoid overspending and various other things. And up until this attack, we’ve always been heavily over-provisioned, not coming anywhere close. And keep in mind that we already provisioned concurrency to handle billions of page views each month. But we didn’t expect to receive hundreds of thousands of requests in a single minute.

Imagine that we called in a favour, and Justin Bieber tweeted out the Fathom website to his 113,000,000 followers. Imagine that, within 10 minutes, every single one of his followers clicked the link (because, hey, who doesn’t love privacy-first analytics). That might be equivalent to 18,833 concurrent connections (assuming we took 100ms to process each request).

During this attack, there were moments where we were being sent the equivalent of 26 Justin Bieber Tweets per minute (JBT/m).
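For anyone who wants to check the Bieber maths, it is an application of Little’s law (average concurrency equals arrival rate multiplied by time per request). The sketch below reuses the figures from the text; the function and constant names are just for illustration.

```python
# Little's law: average concurrency = arrival rate x time spent per request.
FOLLOWERS = 113_000_000
WINDOW_SECONDS = 10 * 60     # everyone clicks within 10 minutes
SECONDS_PER_REQUEST = 0.1    # the 100 ms processing time assumed in the text


def concurrent_connections(requests: int, window_seconds: float,
                           seconds_per_request: float) -> float:
    arrival_rate = requests / window_seconds  # requests per second
    return arrival_rate * seconds_per_request


ONE_JBT = concurrent_connections(FOLLOWERS, WINDOW_SECONDS, SECONDS_PER_REQUEST)

if __name__ == "__main__":
    print(f"1 JBT   ~ {ONE_JBT:,.0f} concurrent connections")
    print(f"26 JBTs ~ {26 * ONE_JBT:,.0f} concurrent connections")
```

One JBT comes out at about 18,833 concurrent connections, and 26 of them at roughly 490,000. That is in the same ballpark as the 500,000 throttles per minute in the graphs, although treating the two as directly comparable is a simplification.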

Fig 1. Lambda Throttles, grouped by minute


Needless to say, this attack took Fathom offline intermittently, and we figure that Fathom was offline for a significant chunk of time.

This attack lasted 4 hours. It was the biggest attack to date. I was on the phone with AWS Support for the majority of the time. By the time the dust had settled, it was 11 PM. My wife was in bed and my evening with her was gone.

I was mad. And we had reached our breaking point as a company. We knew we had to bring in the big guns. We’ve put in so much work to build our small business and we were not going to let this attacker destroy us.

Fighting back

We were done with passively accepting these attacks. We’re in a new world where these attacks are only going to get worse, so we must respond to them as aggressively as possible. Fortunately, we are two highly obsessive individuals. Along with new ideas coming to us every single day, numerous people have reached out offering ideas and their assistance. Knowing the world is on our side feels fantastic.

The first few attacks left us feeling very demotivated. They were frustrating and we were clueless. Fifteen days later, we are armed with so much information, one of the world’s best DDoS mitigation teams, huge plans, and the ambition to build one of the world’s most sophisticated spam detection systems.

We will not let a lonely nerd attack our business and spoil our customers’ analytics data. These attacks have filled us with so much motivation for fighting back, and that’s exactly what we’re going to do.

What’s happening now

Off the back of these attacks, here’s what’s happening at Fathom:

- We have introduced Version 2 of our spam detection system. When we launched Version 1, it was a touch too sensitive and threw up some false positives. But Version 2 is already performing wonderfully and will add resilience to our system.

- We now have 24x7 access to the AWS DDoS Response Team. We have access to their pagers and can have a highly specialized engineer help us mitigate DDoS attacks. The only downside of this is that we need to keep access logs (IP & User-Agent, no browsing history) for 24 hours to allow pattern detection in the event of an attack.

- We are building a 30-point system to help us measure the probability that a page view is spam, making it easy to detect and remove. It’s a privacy-first solution, and it includes some concepts that we didn’t know about 14 days ago.

- We are diving deep into machine learning in PHP and will be bringing that to the table as one of the 30 points mentioned above. I’m super excited, as I now have a reason to use it. This will lead to product improvements throughout Fathom in other areas too.

One of the areas I’m excited about is that we can use Laravel to detect spam and then we can block the attacks at the “edge”. It’s hard to believe that this was stressful 14 days ago. It now feels like a fun challenge, and we are obsessive when it comes to a good challenge.
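To give a feel for what a points-based spam score might look like, here is a rough sketch with three invented signals. The real checks are not published, so every signal name, weight, and field below is hypothetical, and the sketch is in Python rather than PHP purely for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Signal:
    name: str
    weight: float
    check: Callable[[Dict[str, Any]], bool]  # True when the signal fires


# Three made-up signals standing in for the real (unpublished) checks.
SIGNALS: List[Signal] = [
    Signal("missing_user_agent", 3.0, lambda pv: not pv.get("user_agent")),
    Signal("burst_from_same_source", 5.0,
           lambda pv: pv.get("requests_last_minute", 0) > 100),
    Signal("unknown_site_id", 2.0, lambda pv: not pv.get("site_known", True)),
]


def spam_score(page_view: Dict[str, Any]) -> float:
    """Return the share of total weight carried by the signals that fired (0 to 1)."""
    total = sum(signal.weight for signal in SIGNALS)
    fired = sum(signal.weight for signal in SIGNALS if signal.check(page_view))
    return fired / total
```

A page view with no user agent arriving in a burst of 500 requests would score 0.8 here, while an ordinary one would score 0. Keeping each check as a separate weighted signal makes it easy to add, remove, or re-weight checks without rewriting the scoring logic.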

How much did the attack cost us?

This is the question everyone has been thinking about since they started reading this article. So I’ll share the financial damage caused by this attack:

- AWS Lambda: $3,139

- API Gateway: $865

- Database Upgrades: $560 (this will only be in place for about another week as we’re moving to DynamoDB & Elastic Cloud)

- AWS Shield Advanced (prorated): $1,500

Total: $6,064

Despite the significant cost to our business, the ROI of this attack is in the 7 figures (in terms of future value). Without this attack, we wouldn’t have started to build our sophisticated spam detection system, which we hope to roll out in a few months. Additionally, we wouldn’t have various protections that we now have in place. So while it was annoying to go through, we’re so glad it happened.

“What stands in the way becomes the way” - Marcus Aurelius

Why didn’t we speak with Cloudflare?

Let’s address the elephant in the room. Why didn’t we speak with Cloudflare for help with the DDoS attack? After all, they have a ton of experience with this kind of thing.

To put it bluntly, we’re not interested in using them, even if they’re “free”. They're competitors of ours and it's just not a good fit. For the record, Cloudflare has a wonderful, talented, and friendly team. I’m not saying you shouldn’t use Cloudflare, I’m simply explaining why we won’t. Additionally, we have 24x7 phone support & cost reimbursement with AWS whilst Cloudflare don't offer that unless you use their Enterprise plan, which is around the same price as AWS Shield.

$36,000 & my call with Fola

I don’t know anybody who has signed up for this $3,000/month service from AWS… it’s called AWS Shield Advanced. The big value of this service to us is that we have access to some of the world’s best DDoS mitigation experts. In the event of an attack, we can page them, and they’ll help us mitigate the attack, creating firewall rules, identifying bad actors, and offering advice. So instead of just two of us responding to DDoS attacks, we have genius engineers we can speak with, and that feels good.

I’ll quickly do an overview of their features for those who are curious before I tell you if I think it’s worth it so far:

- Proactive event response - The team will contact us if they see an issue.

- DDoS cost protection - If our protected services have to scale up to absorb the attack, AWS will issue us with credits in response to the attack. My understanding is that, in this scenario, we would’ve received $6,000 in AWS credits in response to this (don’t quote me on that, I could be wrong).

- Specialized Support - The DRT will help triage the incidents, identify root causes, and apply mitigations on our behalf.

I don’t want to turn this into an advert for AWS Shield Advanced but that’s a quick overview of the service.

On Friday, Nov 20th, I spoke with a member of the DRT. It was an incredible experience. The chap’s name was Fola. And despite me starting the call by guessing his accent incorrectly (he’s Nigerian, sorry Fola!), he was very pleasant and patient with me. He spent so much time ensuring I understood everything and it was surreal to have someone that skilled reviewing our infrastructure.

On the call, I was asking Fola about the best course of action. Should we “shadow ban” and let the attacker think they were getting through when they weren’t? I was operating on the premise that, if we blocked the attacker, they would get angry, scale up, and keep attacking us. Fola responded with a short sentence that changed everything for me:

“Attackers don’t have unlimited resources.”

It seemed so obvious but I needed to hear it from a professional. He then explained to me how attackers performed the attack, what they’re looking to do, and the best way to respond. He told me that we must block them at the edge and protect our core application. It was a huge shift for me because it now felt like we had so much power. The attacker hasn’t got an unlimited botnet. Even if they had 1,000,000 computers under their control, we can block them. If they bring 1,000,000 more, we block 1,000,000 more. And these attacks cost them. They are looking to get bang for their buck. If we are returning 403 errors to their botnet, they’d be foolish to waste their money.

One final thing I want to highlight about the call with Fola was his reaction to me saying that we can’t keep detailed access logs. I explained to him that we are a privacy-first website analytics company, and we don’t want to keep browsing activity by IP, as we wouldn’t be able to sleep at night. We have our access logs set up so that information like the query string, referrer, etc. is redacted, making the logs completely useless for compiling browsing history but fantastic for blocking spam attacks. I expected him to push for more data, but he calmly said that security and privacy are their number one priority and that they’d never push us into something we’re not comfortable with.
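Redacting a log entry like this is simplest as an allowlist: keep only the fields needed for pattern detection and strip the query string from the URL. The field names and the example URL below are assumptions for illustration, not our actual log format.

```python
from urllib.parse import urlsplit, urlunsplit


def redact_log_entry(entry: dict) -> dict:
    """Keep only what is needed to spot attack patterns.

    Hypothetical field names. Anything not listed here (for example the
    referrer) is dropped, and the URL loses its query string and fragment.
    """
    parts = urlsplit(entry.get("url", ""))
    return {
        "timestamp": entry.get("timestamp"),
        "ip": entry.get("ip"),
        "user_agent": entry.get("user_agent"),
        "url": urlunsplit((parts.scheme, parts.netloc, parts.path, "", "")),
    }


if __name__ == "__main__":
    raw = {
        "timestamp": "2020-11-21T00:00:01Z",
        "ip": "203.0.113.7",
        "user_agent": "ExampleBot/1.0",
        "url": "https://collector.example/collect?page=/pricing&ref=newsletter",
        "referrer": "https://blog.example/some-private-post",
    }
    print(redact_log_entry(raw))
```

An allowlist is the safer default here, because a field added to the raw logs later stays out of the redacted copy unless someone deliberately opts it in.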

After my tweet thread about this attack, Jeffrey Lyon (Head of Engineering - AWS Shield) reached out to me, and I was soon in an email chain with various product managers and heads of department. Everyone wanted to help and hear feedback from us. I am seriously so impressed with AWS. This isn’t the first time I’ve been contacted on Twitter by them and connected to heads of various products. Their community engagement is incredible.

The showdown

On Saturday 21st of November, we were hit with another huge attack. Saturday at midnight UTC is the attacker's favourite time to target us. But it was different this time:

- We had AWS Shield Advanced enabled

- AWS Shield knew our “baseline” and could identify big spikes (attacks)

- We had good firewall rules in place
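How AWS Shield actually models a baseline is not something we know, but the general idea of “know what normal looks like, then flag big deviations” can be sketched with a mean and standard deviation. The function name, the three-sigma cut-off, and the sample numbers are all assumptions.

```python
from statistics import mean, pstdev


def is_spike(history, current, sigmas=3.0):
    """Flag `current` when it sits far above the recent baseline.

    history: recent per-minute request counts considered normal.
    sigmas: how many standard deviations above the mean counts as a spike.
    """
    baseline = mean(history)
    spread = max(pstdev(history), 1.0)  # floor avoids a zero spread on flat traffic
    return current > baseline + sigmas * spread


if __name__ == "__main__":
    normal_minutes = [900, 1000, 1100, 950, 1050]  # made-up baseline traffic
    print(is_spike(normal_minutes, 1150))     # a busy minute, still normal
    print(is_spike(normal_minutes, 305_000))  # an attack-sized minute
```

With this made-up baseline, a busy minute of 1,150 requests is not flagged, while an attack-sized jump is.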

The attack was gigantic but it only peaked at 305,000 concurrents (200k less than last weekend) thanks to our new rate-limiting configuration. However, we still had to escalate it to the DDoS Response Team (DRT).
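The details of our rate-limiting configuration are staying private, but the basic mechanism can be illustrated with a fixed-window counter per client. This is a generic sketch with hypothetical names and limits, not our firewall rules.

```python
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each time window."""

    def __init__(self, limit: int, window_seconds: int = 60):
        self.limit = limit
        self.window_seconds = window_seconds
        # (client, window index) -> requests seen. Old windows are never
        # cleaned up in this sketch; a real system would expire them.
        self._counts = defaultdict(int)

    def allow(self, client: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        key = (client, window)
        self._counts[key] += 1
        return self._counts[key] <= self.limit


if __name__ == "__main__":
    limiter = FixedWindowRateLimiter(limit=3, window_seconds=60)
    print([limiter.allow("203.0.113.7", now=second) for second in range(5)])
    print(limiter.allow("203.0.113.7", now=60))  # a new window starts
```

Once a client passes the limit, every further request in that window is rejected cheaply, which is what keeps a flood from reaching the application behind it.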

I emailed their Head of Engineering (who happened to be online) and he escalated things. I also remembered that we have a Lambda function, which we call the "DRT Bat-Signal", that creates a support ticket & pages the DRT at the press of a button. Things kicked into action.

Within no time, I was in a call with Michel and John (a member of the DRT who specialized in Layer 7 DDoS attacks). John was already parsing our access logs and looking for patterns. This was going to be a good test to see how useful these privacy-friendly logs were. After all, we redact a whole bunch of useful information from our access logs for privacy reasons.

John was busy scanning tens of millions of logs. I was talking his ear off with my ideas and he patiently listened. I came up with a few ideas but none of them would work. Meanwhile, John was compiling lists of offending IPs, sending them to me to block on the firewall, and it was working nicely. But the attack was still coming.
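Compiling a list of offending IPs from access logs can be as simple as counting requests per address and keeping the heavy hitters. The sketch below shows that generic approach only. It is not a description of how John did it, and the field name and threshold are hypothetical.

```python
from collections import Counter


def offending_ips(log_entries, min_requests):
    """Return IPs with at least `min_requests` entries, busiest first."""
    counts = Counter(entry["ip"] for entry in log_entries)
    return [ip for ip, seen in counts.most_common() if seen >= min_requests]


if __name__ == "__main__":
    # Made-up log sample using documentation-only IP ranges.
    logs = (
        [{"ip": "203.0.113.5"}] * 4
        + [{"ip": "198.51.100.9"}] * 3
        + [{"ip": "192.0.2.1"}]
    )
    print(offending_ips(logs, min_requests=3))
```

A per-IP count like this only catches the noisiest senders, which is why the list-based blocking helped but did not stop the attack on its own.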

John then identified a pattern in all the IP addresses. I’m sure I could share more details here but I’m reluctant to, so I’ll leave it to your imagination. John brought in 2 members of staff (Karan & another chap named John) from 2 different teams, along with another chap on the phone to help.

The attack was the same as last weekend. This same attacker has been after us for 3 weeks. But this time, we had 6 people from AWS fighting for us. It was exhilarating.

The dream team went through all kinds of escalations & approvals and was able to block all requests that matched this pattern. It was truly incredible, and the level of care we got was remarkable. AWS is unbelievably driven & organized when it comes to addressing attacks & abuse. If I had to deal with this attack by myself, there’s no way it would’ve been solved this quickly. This AWS Shield Advanced service provides everything they promised but the biggest value is that the DDoS Response Team advocated for us. They handled all the escalation, nothing was too much for them and it felt so good having them on our side. Once the pattern block was put in place, the attack stopped. Just like that.

When the attack was over, John finished his shift, and guess who replaced him... It was Fola! At 8 AM on a Sunday (his timezone), he had the joy to jump into the drama. He joined just in time to watch the attack finish, and then he spent time evaluating the metrics with me. He made various observations on the data we had and gave me an incredible plan that will allow us to automatically block these kinds of attacks in the future. I can’t talk too much about the plan because I don’t want to reveal any of our protection techniques but it's going to help us a lot.

I spoke to Paul shortly after the attack and we laughed that this is the biggest recurring business expense that either of us has ever had but it’s easily one of the best investments we’ve made.

This won’t be the last attack against us. Someone will try to destroy our business again.

But now we have an army on our side.


BIO

Jack Ellis, CTO + teacher
